The Way Back to the Terminal: Reclaiming Engineering Leverage

I have always enjoyed writing code.

Not just shipping features that work, but the act itself. Scripting tasks. Automating something annoying. Shaping a system until it feels predictable.

As tools became more visual, more integrated, and more “helpful,” the distance between what I intended and what actually executed quietly grew. This happened gradually, across editors, workflows, and roles.

This year, as AI agents and complex abstractions became the norm, I noticed a shift. I wasn't just using tools; I was managing them. I decided to move back to the terminal as my primary environment, and it made me realize how much the distance between intent and execution mattered.

The Evolution of the Editor

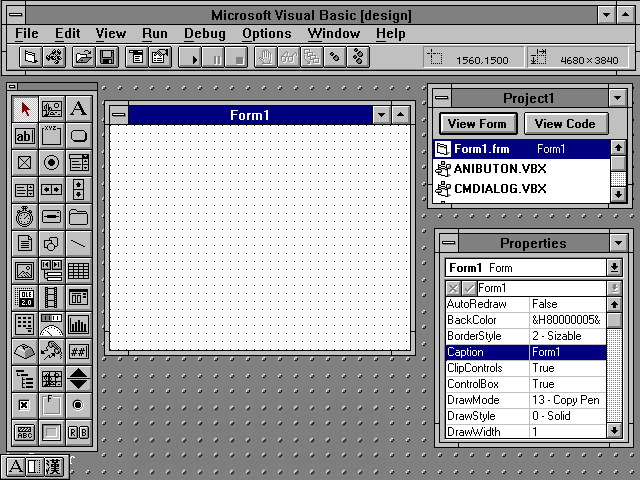

When I was young, I built interfaces in Visual Basic. You could drag buttons onto a canvas and wire up behavior. It was magic. You could shape the computer with a click.

But the "magic" had a ceiling: it was limited to what the UI could do. When I started using Linux, I spent hours debugging why things worked (or didn't). At times it was frustrating, but it was honest and fun. For the first time, I understood the execution behind the walls.

Then I hit a wall of my own: Vim. Editing code with Vim required learning a new language of commands. I thought, "Why would I want to learn this when I can just use a modern IDE?"

Over the next fifteen years, my tools followed the industry's trend toward convenience.

Dreamweaver made design easy. Sublime was fast. Atom reminded me that customization mattered. VS Code brought world-class stability and integration. These tools are incredible. But lately, with new AI tools and editors released every week, I started to feel like I was managing UIs rather than engineering.

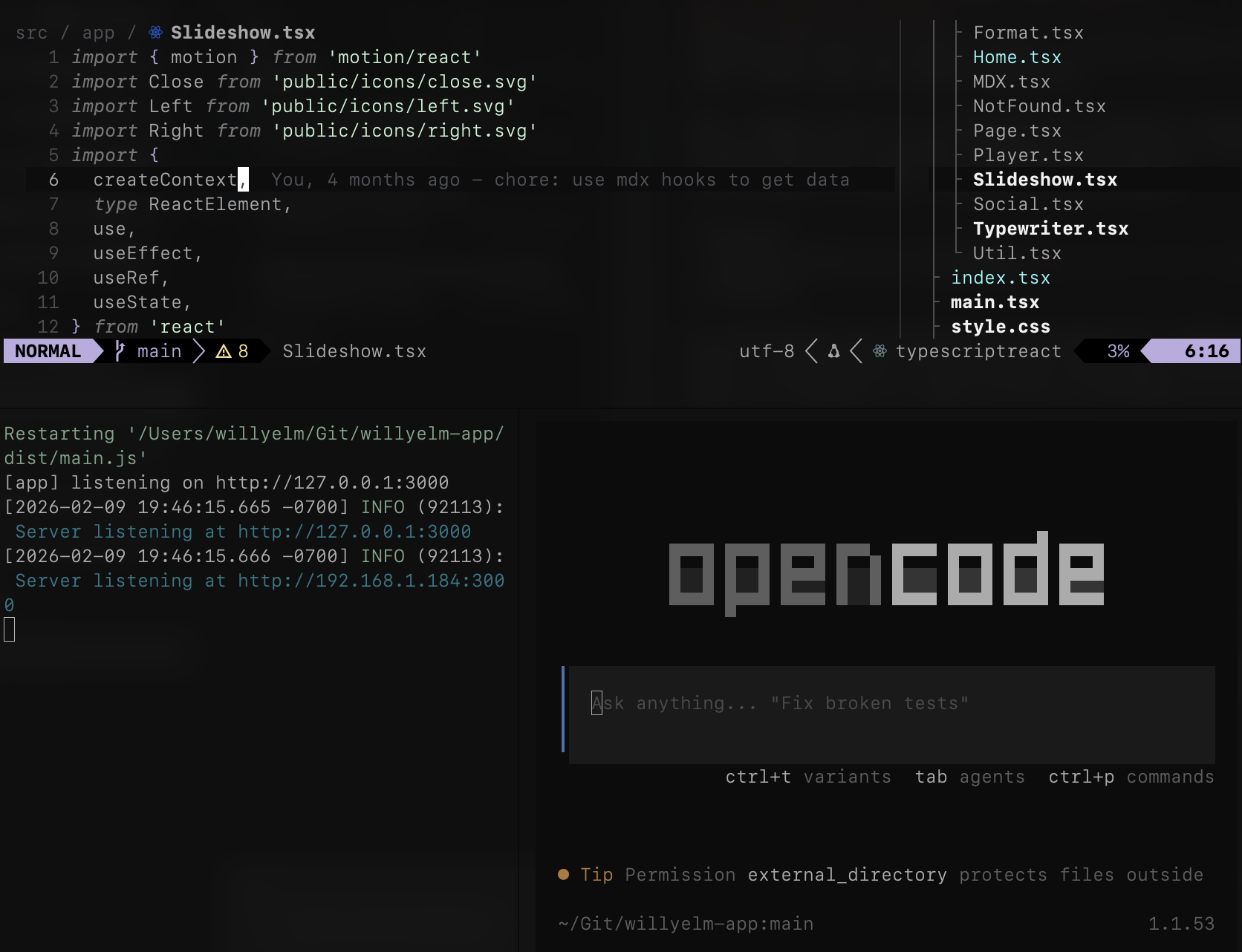

Recently, I went back to where it all started: the terminal, this time with Neovim, something I had always wanted to master but never had the patience for.

Relearning basic navigation required patience, but it forced a shift in my mental model. I stopped adapting to a predefined GUI and started shaping a system.

The editor stopped being a place I managed and became a system to think with: a true extension of my brain.

The Distance Between Intent and Execution

In software development, the core lifecycle is stable.

We design, implement, and test. What varies is the Execution Distance.

I define "Distance" as every layer of non-transparent logic that stands between an engineer’s idea and the machine’s output.

When you increase this distance, you trade understanding for convenience, and you lose Leverage.

The Abstraction Cost

A typical modern web development workflow looks like this:

- Design: Figma, Design Tokens, Guidelines.

- Context: VS Code for implementation.

- Intermediaries: Sidebars for testing, panels for git, extensions for formatting.

- Magic: Embedded AI assistants refactoring components in the background.

Each tool is "helpful," but the implementation is fragmented. State lives in opaque panels, background processes, and even hidden proprietary layers.

When something breaks, you aren't just debugging code; you're debugging the interactions between many layers. The more tools you add, the bigger the gap between your intent and the actual execution. This is the hidden tax of a high Execution Distance.

Now, compare that to a system-driven workflow. Implementation becomes a single execution surface. Commands are explicit. Scripts encode intent. Most importantly, the distance is short, simple and predictable.
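As a minimal illustration of "commands are explicit, scripts encode intent," here is a sketch assuming a project that uses git and `npm test`. The step names and commands are hypothetical placeholders, not the author's actual setup. Each step is plain data that is printed before it runs, so what executes is exactly what you read.

```python
import shlex
import subprocess
from dataclasses import dataclass


@dataclass
class Step:
    """One explicit, named step in a workflow."""
    name: str
    cmd: list[str]


# Hypothetical workflow: replace these commands with your own project's.
WORKFLOW = [
    Step("status", ["git", "status", "--short"]),
    Step("test", ["npm", "test"]),
    Step("diff", ["git", "diff", "--stat"]),
]


def run(steps, dry_run=True):
    """Print each command before running it and return the printed lines.

    With dry_run=True nothing is executed, so the plan can be reviewed first.
    """
    shown = []
    for step in steps:
        line = shlex.join(step.cmd)  # shell-quoted, copy-pasteable command
        print(f"[{step.name}] $ {line}")
        shown.append(line)
        if not dry_run:
            # check=True stops the workflow at the first failing command.
            subprocess.run(step.cmd, check=True)
    return shown


if __name__ == "__main__":
    run(WORKFLOW)
```

Calling `run(WORKFLOW, dry_run=False)` executes the same steps in order. Keeping the plan as data means it can be read, versioned, and extended without any hidden layer deciding what actually runs.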

By shortening the distance, we increase our Engineering Leverage: the ability to understand and shape the output. When the distance is long, we are at the mercy of the tools.

AI at the Execution Layer

As LLMs get better at understanding intent, traditional UI layers start to feel heavy. We are moving toward a world where we describe what we want, and the system executes the steps. Being close to the execution surface becomes even more important.

The closer you and the AI operate to the execution surface, the easier it is to stay in control. When AI is buried inside a "Black Box" GUI, verification becomes a chore of clicking through menus to see what actually changed.

Embracing AI with a system you control allows you to shape the output with more precision. You gain the speed of LLMs without sacrificing leverage. You don't even need the latest model to get significant benefits. Even a smaller model can be a powerful assistant when you have the right prompts, scripts, documentation, and integrations.

The goal isn't to do less with AI; it's to get better at directing what it produces, and to scale that output.

Engineering Leverage

This isn't an argument against GUI editors like VS Code or Cursor. They are fantastic products. However, there is a risk of becoming a Managed Consumer who can only operate within the boundaries of what a vendor provides.

In a way, working in the terminal feels like going back. But it also feels like moving forward.

The terminal encourages a REPL mindset: try, observe, adjust, script. It forces you to understand the primitives of your craft. Whether you use Neovim or a highly customized VS Code, the objective is the same: reclaim the execution layer.

Choosing your own LLM and integration strategy provides more leverage than a fixed subscription model. More automation tooling is not always the answer.

As systems grow, clarity becomes more important than convenience. Reducing the distance between intent and execution ensures that your codebase scales with your understanding of it.

When you build your own system step-by-step, the work becomes more predictable and significantly more enjoyable.